As my previous post just mentioned, mdds 0.6.0 has finally been released, and it contains two new data structures: multi_type_vector and multi_type_matrix. I’d like to explain a little more about multi_type_vector in this post because, of all the data structures I’ve added to mdds over the course of its project life, I firmly believe this structure deserves some explanation.

What motivated multi_type_vector

The initial idea for this structure came from a discussion I had with Michael Meeks over two years ago in Nuremberg, Germany. Back then, he was dumping his idea on me about how to optimize cell storage in LibreOffice Calc. His idea was that, instead of wrapping cell values in cell objects allocated on the heap and storing them in a column array, we store raw cell values directly in an array without the cell object wrappers. This way, if you have a column filled with numbers from top down, those values are guaranteed to be placed in a contiguous region of memory, which is likely to fall within the same memory page unless their total size exceeds the page size. By contrast, if you store cell values wrapped inside cell objects that are allocated on the heap, those values are most likely scattered all around the memory space and probably located in many different memory pages.

Now, one of the most common operations that typical spreadsheet users do is to operate on numbers in cells. It could be summing up their totals, calculating their average, determining their minimum and maximum values and so on and so forth. To make these operations happen, the program first needs to fetch all the cell values before it can work on them.

Assume that these values are stored inside cell objects which are located in hundreds of memory pages. The mere action of fetching the cell values requires touching all of these memory pages, which causes the CPU to fetch them from the main memory in order to access them. Worse, if some of those pages are not in physical memory but have been swapped out to virtual memory, accessing them causes a page fault, which further degrades performance since that particular memory page must be swapped in from disk. In contrast, if the values are all located in a single memory page (or just several of them instead of hundreds), the CPU needs to fetch only once or a few times, depending on the size of the data being fetched.

Moreover, most CPUs these days come equipped with caches that hold recently fetched regions of memory in order to speed up subsequent access to them. Because of this, keeping all your data close together reduces the chance of the CPU fetching it from the main memory (or, in the worst case, from virtual memory), which is slower than fetching it from the caches.

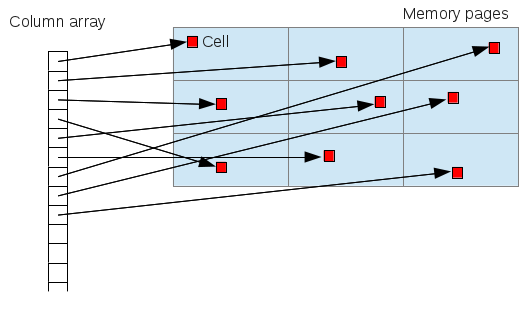

Let’s visualize this idea for a moment. The current cell storage looks like this:

As you can see, cells are scattered in different pages. To access them all, you need to load all of these pages that contain the requested cell objects.

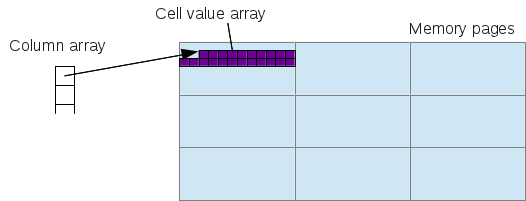

Compare that with the following illustration:

where all requested cell values are stored in a single array that’s located in a single page. I hope it’s obvious by now which one actually fetches data faster.

Calc currently employs the former storage model, and our hope is to make Calc’s storage model more efficient both space- and time-wise by switching to the latter model.
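To make the contrast a bit more concrete, here is a minimal sketch of the two storage models in code. The `cell` struct and both function names are made up for this illustration and are not taken from Calc’s code base; the point is only that the first loop chases one pointer per cell while the second walks a single contiguous array.

```cpp
#include <cassert>
#include <memory>
#include <numeric>
#include <vector>

// Hypothetical stand-in for a heap-allocated cell object.
struct cell
{
    double value;
};

// Former model: the column stores pointers, and each value lives wherever
// the allocator happened to place its cell object.
double sum_wrapped(const std::vector<std::unique_ptr<cell>>& column)
{
    double total = 0.0;
    for (const auto& p : column)
    {
        assert(p);         // every slot holds a cell in this sketch
        total += p->value; // one pointer dereference per cell
    }
    return total;
}

// Latter model: the raw values sit next to each other in one array.
double sum_raw(const std::vector<double>& column)
{
    return std::accumulate(column.begin(), column.end(), 0.0);
}
```

Both functions return the same total for the same values; what differs is how many distinct memory locations have to be visited to compute it.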

Applying this to the design

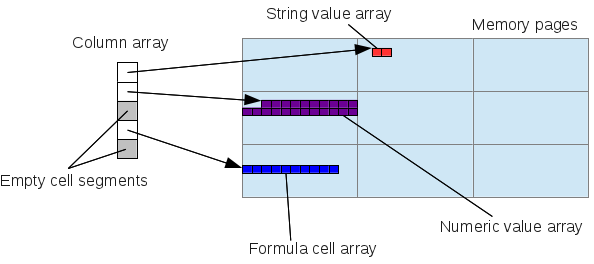

One difficulty with applying this concept to column storage is that, a column in a typical spreadsheet application allows you to store values of different types. Cells containing a bunch of test scores may have in the same column a title cell at the top that stores the text “Score”. Likewise, those test scores may be followed by an empty cell followed by a bunch of formula cells containing formula expressions summing, averaging, or counting the test scores. Since one array can only hold values of identical type, this requires us to use a separate array for each segment of identical cell type.

With that, the column storage structure becomes somewhat like this:

An empty cell segment doesn’t store any value array, but it does store its size which is necessary to calculate the logical position of the next non-empty element.

This is the basic design of the multi_type_vector structure. It stores values of each identical type in a single, secondary value array while the primary column array stores the memory locations of all secondary value arrays. It’s important to point out that, while I used the spreadsheet use case as an example to explain the basic idea of the structure, the structure itself can be used in other, much broader use cases, and is not specific to spreadsheet applications.
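The layout described above can be sketched with a handful of standard containers. This is a deliberately simplified model and not the actual mdds implementation: the `block`, `position`, `locate` and `make_example_column` names are all hypothetical, only two value types are handled, and the trailing formula cells of the example column are replaced by a plain number.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

enum class block_type { empty, numeric, string };

// One segment of the column. An empty segment keeps only its size.
struct block
{
    block_type type;
    std::size_t size;                 // number of logical positions covered
    std::vector<double> numbers;      // filled only when type == numeric
    std::vector<std::string> strings; // filled only when type == string
};

struct position
{
    std::size_t block_index; // which block holds the element
    std::size_t offset;      // offset of the element inside that block
};

// Map a logical position to (block, offset) by adding up block sizes.
position locate(const std::vector<block>& column, std::size_t pos)
{
    std::size_t start = 0;
    for (std::size_t i = 0; i < column.size(); ++i)
    {
        if (pos < start + column[i].size)
            return position{i, pos - start};
        start += column[i].size;
    }
    assert(false && "position is out of range");
    return position{column.size(), 0};
}

// A title cell, three scores, two empty cells, then one more number.
std::vector<block> make_example_column()
{
    std::vector<block> column;
    column.push_back(block{block_type::string, 1, {}, {"Score"}});
    column.push_back(block{block_type::numeric, 3, {82.0, 91.5, 77.0}, {}});
    column.push_back(block{block_type::empty, 2, {}, {}});
    column.push_back(block{block_type::numeric, 1, {250.5}, {}});
    return column;
}
```

With this column, `locate(column, 2)` lands on the second score inside the numeric block, and `locate(column, 6)` has to count the two empty positions to reach the last block, which is why the empty segment must still record its size.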

In the next section, I will talk about the challenges I faced while implementing this structure. But first, one terminology note: from now on I will use the term “element block” (or simply “block”) to refer to what was referred to as “secondary value array” up to this point. I use this name in my implementation code too, so using this name makes it easier for me to explain things.

The challenges

The basic design of multi_type_vector is not that complicated and was not very challenging to understand and implement. What was more challenging was to handle cases where a value, or a series of values, is inserted over a block or blocks of different types. There are a variety of ways to insert new values into this container, and sometimes the new values overlap existing blocks of different types, or overlap a part of an existing block of the same type and a part of a block of a different type, and so on and so forth. Because the basic design of the container requires that the type of every element block differs from its neighbors’, some data insertions force the container to re-organize its element block structure. This posed quite a challenge since multi_type_vector supports the following methods of modification:

- set a single value to overwrite an existing one if any (set() method, 2-parameter variant),
- set a sequence of values to overwrite existing values if any (set() method, 3-parameter variant),
- insert a sequence of values and shift those existing values that occur below the insertion position (insert() method),
- set a segment of existing values empty (set_empty() method), and
- insert a sequence of empty values and shift those existing values that occur below the insertion position (insert_empty() method),

and each of these scenarios requires a different strategy for element block re-organization. Non-overwriting data insertion scenarios (insert() and insert_empty()) were somewhat easier to handle than the overwriting data insertion scenarios (set() and set_empty()), as the latter required more branching and significantly more code to cover all cases.
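To give a feel for the re-organization involved, here is a heavily simplified sketch of the single-value overwrite case. It tracks only the type and size of each block, not the values, and the `run` struct and `set_one` function are hypothetical names for this illustration rather than anything from the mdds code. The real container has many more cases to cover, and it does not rebuild the whole block list the way step 3 does here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// A block reduced to its type tag and length
// ('n' = numeric, 's' = string, 'e' = empty).
struct run
{
    char type;
    std::size_t size;
};

// Overwrite the element at pos with a value of the given type, then restore
// the rule that no two neighbouring blocks share a type.
void set_one(std::vector<run>& runs, std::size_t pos, char type)
{
    // 1. Find the block that contains pos.
    std::size_t start = 0, i = 0;
    while (i < runs.size() && pos >= start + runs[i].size)
    {
        start += runs[i].size;
        ++i;
    }
    assert(i < runs.size());

    if (runs[i].type == type)
        return; // same type: the value is replaced in place

    // 2. Split the block into up to three pieces around pos.
    const std::size_t before = pos - start;
    const std::size_t after = runs[i].size - before - 1;
    const char old_type = runs[i].type;

    std::vector<run> pieces;
    if (before)
        pieces.push_back(run{old_type, before});
    pieces.push_back(run{type, 1});
    if (after)
        pieces.push_back(run{old_type, after});

    const std::ptrdiff_t idx = static_cast<std::ptrdiff_t>(i);
    runs.erase(runs.begin() + idx);
    runs.insert(runs.begin() + idx, pieces.begin(), pieces.end());

    // 3. Merge neighbours that ended up with the same type.
    std::vector<run> merged;
    for (const run& r : runs)
    {
        if (!merged.empty() && merged.back().type == r.type)
            merged.back().size += r.size;
        else
            merged.push_back(r);
    }
    runs.swap(merged);
}
```

For example, overwriting the lone string in a numeric(3), string(1), numeric(2) column with a number collapses all three blocks into one numeric block of size 6, while writing a string into the middle of a single numeric block splits it into three.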

This challenge was further exacerbated by the additional requirement to support a “managed” element block that stores pointers to objects whose life cycle is managed by the block. I decided to add this one for convenience reasons, to allow transitioning the current cell storage model into the new storage model in several phases rather than doing it in one big-bang change. During the transition phase, we will likely convert the number and string cells into raw value element blocks, while keeping more complex cell structures such as formula cells still wrapped in their current form. This means that, during the transition, we will have element blocks storing pointers to heap-allocated formula cell objects scattered across memory space. Eventually these formula objects need to be stored in a contiguous memory space, but that will have to wait until after the transition phase.
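The idea of a block that owns the objects it points to can be sketched as follows. This is only an illustration of the ownership rule, assuming a hypothetical `formula_cell` class with an instance counter; the `managed_block` class below is not the mdds `managed_element_block` template and shares none of its interface.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical heap-allocated cell that counts its live instances.
struct formula_cell
{
    static int live_count;
    formula_cell() { ++live_count; }
    ~formula_cell() { --live_count; }
};

int formula_cell::live_count = 0;

// A block that stores raw pointers and deletes the pointed-to objects when
// the block itself goes away.
class managed_block
{
    std::vector<formula_cell*> m_cells;

public:
    managed_block() {}
    managed_block(const managed_block&) = delete;
    managed_block& operator=(const managed_block&) = delete;

    ~managed_block()
    {
        for (formula_cell* p : m_cells)
            delete p;
    }

    // The block takes ownership of p.
    void push_back(formula_cell* p) { m_cells.push_back(p); }

    std::size_t size() const { return m_cells.size(); }
};
```

Once a cell has been handed to the block, client code no longer deletes it; destroying the block destroys every cell it holds.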

Supported data types

Template containers are supposed to work with any custom types, and multi_type_vector is no exception. But unlike most standard template containers, which normally have one primary data type (and perhaps another one for associative containers), multi_type_vector allows storage of an unspecified number of data types.

By default, multi_type_vector supports the following data types: bool, short, unsigned short, int, unsigned int, long, unsigned long, double, and std::string. If these data types are all you need when using multi_type_vector, then you won’t have to do anything extra; just instantiate the template with

```cpp
typedef mdds::multi_type_vector<mdds::mtv::element_block_func> mtv_type;
```

and start using it like so:

```cpp
mtv_type data(10); // set initial size to 10.

// insert values.
data.set(0, 1.1);
data.set(1, true);
data.set(3, std::string("Foo"));
...
```

But if you need to store other types of data, you’ll need to do a little more work. Let’s say you have this class type:

```cpp
class my_custom_foo
{
    ....
};
```

and you want to store instances of this class in multi_type_vector. I’ll skip the actual definition of this class, but let’s assume that the basic stuff such as default and copy constructors, equality operator etc. are all implemented and working properly.

First, you need to define a unique numeric ID for your custom type. Each element type must be associated with a numeric ID. The IDs for standard data types are defined as follows:

```cpp
namespace mdds { namespace mtv {

typedef int element_t;

const element_t element_type_empty   = -1;
const element_t element_type_numeric = 0;
const element_t element_type_string  = 1;
const element_t element_type_short   = 2;
const element_t element_type_ushort  = 3;
const element_t element_type_int     = 4;
const element_t element_type_uint    = 5;
const element_t element_type_long    = 6;
const element_t element_type_ulong   = 7;
const element_t element_type_boolean = 8;

}}
```

These values are what gets returned by calling the get_type() method. When you are adding a new data type, you’ll need to add a new ID for it. Here is how to do it the safe way:

```cpp
const mdds::mtv::element_t element_my_custom_type = mdds::mtv::element_type_user_start;
```

The value of element_type_user_start defines the starting number of all custom type IDs. IDs for the standard types all come before this value. If you only want to define one custom type ID, then just using that value will be sufficient. If you need another ID, just add 1 to it and use it for that type. As long as each ID is unique, it doesn’t really matter what their actual values are.

Next, you need to choose the block type. There are 3 block types to choose from:

- mdds::mtv::default_element_block

- mdds::mtv::managed_element_block

- mdds::mtv::noncopyable_managed_element_block

The last 2 are relevant only when you need a managing pointer element block to store heap objects. Right now, let’s just use the default element block for your custom type.

```cpp
typedef mdds::mtv::default_element_block<element_my_custom_type, my_custom_foo> my_custom_element_block;
```

With this, define all necessary callback functions for this custom type via the following preprocessor macro:

```cpp
MDDS_MTV_DEFINE_ELEMENT_CALLBACKS(my_custom_foo, element_my_custom_type, my_custom_foo(), my_custom_element_block)
```

Note that these callback functions are called from within multi_type_vector via unqualified calls, so it’s essential that they are in the same namespace as the custom data type in order to satisfy C++’s argument-dependent lookup rule.
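If argument-dependent lookup is unfamiliar, the following standalone sketch shows the rule at work. None of the names here come from mdds: `app`, `container`, `describe` and `call_unqualified` are invented for the illustration, with `describe` playing the role of a callback and `call_unqualified` playing the role of the container’s internal code.

```cpp
#include <string>

namespace app {

struct my_custom_foo {};

// A callback defined in the same namespace as the type it serves.
std::string describe(const my_custom_foo&)
{
    return "app::describe";
}

}

namespace container {

// Generic code making an unqualified call. No describe() is visible in
// this namespace; the compiler finds app::describe() by looking in the
// namespace of the argument's type when the template is instantiated.
template<typename T>
std::string call_unqualified(const T& v)
{
    return describe(v);
}

}
```

Calling `container::call_unqualified(app::my_custom_foo())` compiles and reaches `app::describe`. Had `describe` been placed in an unrelated namespace, the call inside the template would fail to compile, which is the reason the callbacks must sit next to the custom type.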

So far so good. The last step that you need to do is to define a structure of element block functions. This is also boilerplate, and for a single custom type case, you can define something like this:

```cpp
struct my_element_block_func
{
    static mdds::mtv::base_element_block* create_new_block(
        mdds::mtv::element_t type, size_t init_size)
    {
        switch (type)
        {
            case element_my_custom_type:
                return my_custom_element_block::create_block(init_size);
            default:
                return mdds::mtv::element_block_func::create_new_block(type, init_size);
        }
    }

    static mdds::mtv::base_element_block* clone_block(const mdds::mtv::base_element_block& block)
    {
        switch (mdds::mtv::get_block_type(block))
        {
            case element_my_custom_type:
                return my_custom_element_block::clone_block(block);
            default:
                return mdds::mtv::element_block_func::clone_block(block);
        }
    }

    static void delete_block(mdds::mtv::base_element_block* p)
    {
        if (!p)
            return;

        switch (mdds::mtv::get_block_type(*p))
        {
            case element_my_custom_type:
                my_custom_element_block::delete_block(p);
            break;
            default:
                mdds::mtv::element_block_func::delete_block(p);
        }
    }

    static void resize_block(mdds::mtv::base_element_block& block, size_t new_size)
    {
        switch (mdds::mtv::get_block_type(block))
        {
            case element_my_custom_type:
                my_custom_element_block::resize_block(block, new_size);
            break;
            default:
                mdds::mtv::element_block_func::resize_block(block, new_size);
        }
    }

    static void print_block(const mdds::mtv::base_element_block& block)
    {
        switch (mdds::mtv::get_block_type(block))
        {
            case element_my_custom_type:
                my_custom_element_block::print_block(block);
            break;
            default:
                mdds::mtv::element_block_func::print_block(block);
        }
    }

    static void erase(mdds::mtv::base_element_block& block, size_t pos)
    {
        switch (mdds::mtv::get_block_type(block))
        {
            case element_my_custom_type:
                my_custom_element_block::erase_block(block, pos);
            break;
            default:
                mdds::mtv::element_block_func::erase(block, pos);
        }
    }

    static void erase(mdds::mtv::base_element_block& block, size_t pos, size_t size)
    {
        switch (mdds::mtv::get_block_type(block))
        {
            case element_my_custom_type:
                my_custom_element_block::erase_block(block, pos, size);
            break;
            default:
                mdds::mtv::element_block_func::erase(block, pos, size);
        }
    }

    static void append_values_from_block(
        mdds::mtv::base_element_block& dest, const mdds::mtv::base_element_block& src)
    {
        switch (mdds::mtv::get_block_type(dest))
        {
            case element_my_custom_type:
                my_custom_element_block::append_values_from_block(dest, src);
            break;
            default:
                mdds::mtv::element_block_func::append_values_from_block(dest, src);
        }
    }

    static void append_values_from_block(
        mdds::mtv::base_element_block& dest, const mdds::mtv::base_element_block& src,
        size_t begin_pos, size_t len)
    {
        switch (mdds::mtv::get_block_type(dest))
        {
            case element_my_custom_type:
                my_custom_element_block::append_values_from_block(dest, src, begin_pos, len);
            break;
            default:
                mdds::mtv::element_block_func::append_values_from_block(dest, src, begin_pos, len);
        }
    }

    static void assign_values_from_block(
        mdds::mtv::base_element_block& dest, const mdds::mtv::base_element_block& src,
        size_t begin_pos, size_t len)
    {
        switch (mdds::mtv::get_block_type(dest))
        {
            case element_my_custom_type:
                my_custom_element_block::assign_values_from_block(dest, src, begin_pos, len);
            break;
            default:
                mdds::mtv::element_block_func::assign_values_from_block(dest, src, begin_pos, len);
        }
    }

    static bool equal_block(
        const mdds::mtv::base_element_block& left, const mdds::mtv::base_element_block& right)
    {
        if (mdds::mtv::get_block_type(left) == element_my_custom_type)
        {
            if (mdds::mtv::get_block_type(right) != element_my_custom_type)
                return false;

            return my_custom_element_block::get(left) == my_custom_element_block::get(right);
        }
        else if (mdds::mtv::get_block_type(right) == element_my_custom_type)
            return false;

        return mdds::mtv::element_block_func::equal_block(left, right);
    }

    static void overwrite_values(mdds::mtv::base_element_block& block, size_t pos, size_t len)
    {
        switch (mdds::mtv::get_block_type(block))
        {
            case element_my_custom_type:
                // Do nothing.  The client code manages the life cycle of these cells.
            break;
            default:
                mdds::mtv::element_block_func::overwrite_values(block, pos, len);
        }
    }
};
```

This is quite a bit of code, I know. I should definitely work on making it a bit simpler to use with a lot less typing in future versions of mdds. Anyway, with this in place, we can finally define the multi_type_vector type:

```cpp
typedef mdds::multi_type_vector<my_element_block_func> my_mtv_type;
```

With all these bits in place, you can finally start using this container:

```cpp
my_mtv_type data(10);

my_custom_foo foo;
data.set(0, foo);  // Insert a custom data element.
data.set(1, 12.3); // You can still use the standard data types.
...
```

That’s all I will say about custom data types for now. I hope this gives you a glimpse of how this container works in general.

Future work

Since this is the very first incarnation of multi_type_vector, I have no doubt this still has a lot of issues to be worked out. One immediate issue that comes to mind is the performance of element position lookup. Given a logical position of the element, the container first has to locate the right element block that stores the specified element, but this lookup always happens from the first element block. So, if you are doing a continuous lookup of millions of elements in a loop, the overall lookup speed can be quite slow since each lookup starts from the first block. Speeding up this operation is certainly a task to be worked on in the near future. Meanwhile, the user of this container can resort to using the iterators to iterate through the element blocks and their member elements.
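The cost difference between the two access patterns can be sketched on a toy model. The `num_block` struct and the three functions below are hypothetical and do not use the mdds iterator interface; the `blocks_visited` counter is there only to make the amount of block scanning visible.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Toy block: either empty (size only) or a run of doubles.
struct num_block
{
    bool empty;
    std::size_t size;
    std::vector<double> values; // holds `size` values unless empty
};

// Position-based access: every call scans the block list from the front.
double get(const std::vector<num_block>& blocks, std::size_t pos,
           std::size_t& blocks_visited)
{
    std::size_t start = 0;
    for (const num_block& b : blocks)
    {
        ++blocks_visited;
        if (pos < start + b.size)
            return b.empty ? 0.0 : b.values[pos - start];
        start += b.size;
    }
    assert(false && "position is out of range");
    return 0.0;
}

// Sum by looking up each logical position in turn.
double sum_by_position(const std::vector<num_block>& blocks, std::size_t total,
                       std::size_t& blocks_visited)
{
    double sum = 0.0;
    for (std::size_t pos = 0; pos < total; ++pos)
        sum += get(blocks, pos, blocks_visited);
    return sum;
}

// Sum by walking the blocks once and reading each block's values directly.
double sum_by_block(const std::vector<num_block>& blocks,
                    std::size_t& blocks_visited)
{
    double sum = 0.0;
    for (const num_block& b : blocks)
    {
        ++blocks_visited;
        for (double v : b.values)
            sum += v;
    }
    return sum;
}
```

Both functions produce the same sum, but the position-based version visits more blocks the further down the column it goes, whereas the block-wise version visits each block exactly once.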

Another issue is the verbosity of the element block function structure required for custom element blocks. This can be worked out by providing templatized structures per number of custom data types. This one is probably easier to solve, and I should look into that soon.
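As a rough idea of what such a templatized structure might look like for the single-custom-type case, here is a sketch built on stand-in types. Everything in it is hypothetical: `base_block`, `default_block_func`, `custom_block` and `custom_block_func` are simplified placeholders and not mdds classes, and only two of the callbacks are covered.

```cpp
typedef int element_t;

// Stand-in for the library's base block class.
struct base_block
{
    element_t type;
    explicit base_block(element_t t) : type(t) {}
    virtual ~base_block() {}
};

// Plays the role of the built-in function set for the standard types.
struct default_block_func
{
    static base_block* create_new_block(element_t type) { return new base_block(type); }
    static void delete_block(base_block* p) { delete p; }
};

// A custom block type tagged with its own numeric ID.
template<element_t TypeId>
struct custom_block : base_block
{
    static const element_t block_type = TypeId;

    custom_block() : base_block(TypeId) {}

    static base_block* create_block() { return new custom_block; }
    static void delete_block(base_block* p) { delete static_cast<custom_block*>(p); }
};

// One template takes the place of the hand-written switch statements:
// handle the custom block type here, forward everything else.
template<typename Block>
struct custom_block_func
{
    static base_block* create_new_block(element_t type)
    {
        if (type == Block::block_type)
            return Block::create_block();
        return default_block_func::create_new_block(type);
    }

    static void delete_block(base_block* p)
    {
        if (!p)
            return;

        if (p->type == Block::block_type)
            Block::delete_block(p);
        else
            default_block_func::delete_block(p);
    }
};
```

With something along these lines, the client code would shrink to a single typedef such as `custom_block_func<custom_block<100> >`, where 100 is an arbitrary ID picked for the example, instead of a page of switch statements.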

That’s all for now, ladies and gentlemen!