Introduction

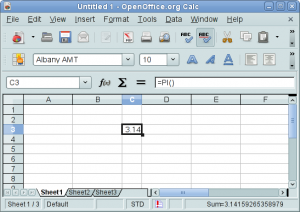

Now that the child workspace (CWS) koheirowlimitperf has been marked ready for QA, I believe this is a good time to talk about the changes that the CWS will bring once it gets integrated.

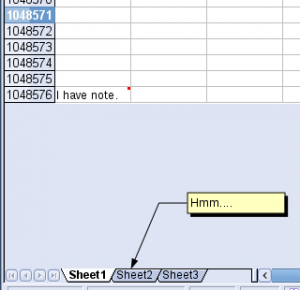

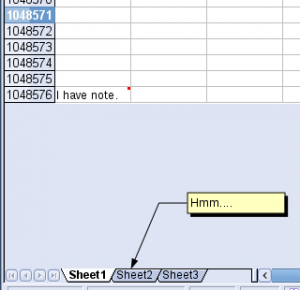

The role of this CWS is to upstream various performance optimizations from Go-OO that arose from the increase of the row limit from 65536 (64K rows) to 1048576 (roughly 1 million rows). However, the upstream build will not see the increase of the row limit itself yet, as the upstream developers still consider that move premature due to two outstanding issues that are show stoppers for them. I’ll talk more about those issues later.

What this CWS does change is the storage of row attributes, in order to 1) improve the performance of querying the attributes, and 2) make extra information available that can be used to make the algorithms behind various operations more efficient. The CWS also makes several other changes that improve performance in general but are unrelated to the change in the row attribute storage.

Limitation of the old attribute storage

Before I talk about how the row attributes are stored in the new storage, I’d like to talk about the limitation of the old attribute storage, and why it was not adequate when the row limit was raised to 1 million rows. In this article I’ll only talk about row attribute storage, but the same argument applies to the column attribute storage as well.

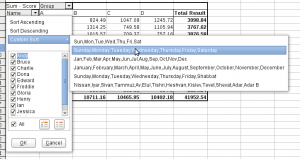

The old attribute container was designed to store several different attributes all together, namely,

- hidden state,

- filtered state,

- automatic page break position,

- manual page break position, and

- whether or not a row has a manual height.

They were stored per range, not per individual row or column, so that if a range of rows had an identical set of attribute values over the entire range, that attribute set would be stored as a single record. Searching for the value of an attribute for an arbitrary row was performed linearly from the first record, since the core of the container was simply an array.
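To make the shape of that scheme concrete, here is a minimal sketch of a range-record array with a linear lookup. It is an illustration only, not Calc’s actual code: the names `RowFlag`, `RangeRecord` and `lookupFlags` are hypothetical, and the `steps` counter exists only to show how many records a lookup has to visit.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical attribute flags; names are illustrative, not Calc's own.
enum RowFlag : std::uint8_t {
    Hidden = 1,
    Filtered = 2,
    AutoBreak = 4,
    ManualBreak = 8,
    ManualHeight = 16
};

// One record covers all rows after the previous record's lastRow,
// up to and including its own lastRow.
struct RangeRecord {
    int lastRow;
    std::uint8_t flags;
};

// Linear scan from the first record. The cost grows with the number of
// records, so an over-partitioned container makes every lookup slower.
std::uint8_t lookupFlags(const std::vector<RangeRecord>& records, int row,
                         int* steps = nullptr) {
    int visited = 0;
    std::uint8_t result = 0;
    for (const RangeRecord& r : records) {
        ++visited;
        if (row <= r.lastRow) {
            result = r.flags;
            break;
        }
    }
    if (steps) *steps = visited;
    return result;
}
```

With three records, a lookup near the bottom of the sheet visits all three; with tens of thousands of records, it visits tens of thousands.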

There were primarily two problems with this storage scheme that made the container non-scalable. First, the attributes stored together in this container had different distribution patterns, which caused over-partitioning of the container and unnecessarily slowed down the queries of all stored attributes.

For instance, the hidden and filtered attributes are distributed in a very similar manner, but the manual height attribute is not necessarily distributed in a manner similar to these attributes. Because of this, storing that together with the hidden and filtered attributes unnecessarily increased the partition count, which in turn would slow down the query speed of all three attributes.

Even more problematic were the automatic page break attributes; because the automatic page breaks always need to be set for the entire sheet, increasing the row limit significantly raised the partition count. On top of that, the page breaks themselves are actually single-row attributes; it made little sense to store them in a container that was range-based.
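The effect of mixing attributes can be shown with a small counting exercise. The sketch below assumes a made-up sheet in which rows 100 to 199 are hidden and an automatic page break falls every `breakEvery` rows; `countRuns` and `comparePartitioning` are hypothetical helpers, not part of Calc.

```cpp
#include <cstddef>
#include <vector>

// Number of maximal runs of equal consecutive values, i.e. the number of
// records a range-based container would need to store this sequence.
template <typename T>
std::size_t countRuns(const std::vector<T>& perRow) {
    if (perRow.empty()) return 0;
    std::size_t runs = 1;
    for (std::size_t i = 1; i < perRow.size(); ++i)
        if (perRow[i] != perRow[i - 1]) ++runs;
    return runs;
}

struct PartitionCounts {
    std::size_t hiddenOnly;  // hidden flag stored on its own
    std::size_t combined;    // hidden flag and page breaks stored together
};

// Assumed layout: rows [100, 200) hidden, a page break every breakEvery rows.
PartitionCounts comparePartitioning(std::size_t rowCount, std::size_t breakEvery) {
    std::vector<int> hidden(rowCount, 0), combined(rowCount, 0);
    for (std::size_t row = 0; row < rowCount; ++row) {
        const bool isHidden = row >= 100 && row < 200;
        const bool isBreak = row > 0 && row % breakEvery == 0;
        hidden[row] = isHidden ? 1 : 0;
        combined[row] = (isHidden ? 1 : 0) | (isBreak ? 2 : 0);
    }
    return {countRuns(hidden), countRuns(combined)};
}
```

For 1000 rows with a break every 50 rows, the hidden flag alone needs 3 records while the combined storage needs 39, and the combined count keeps growing with the sheet size even though the hidden rows never change.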

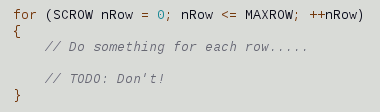

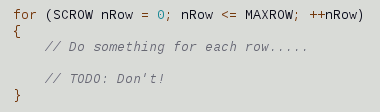

This over-partitioning problem led to the second problem: when the container was over-partitioned, querying for an attribute value became very slow, because the query used a linear search whose cost grows in proportion to the number of partitions. Because row attributes are used extensively in many areas of Calc’s operations, often inside loops, the degradation of lookup performance caused all sorts of interesting performance problems when the row limit was raised to 1 million.

New way of storing row attributes

Separation of row attributes

The first step in speeding up storage and lookup of row attributes is to separate them into their own containers, so that the storage of one attribute does not affect the storage of another. It was natural to use a range-based container to store the hidden, filtered and manual height attributes, since these attributes typically span many consecutive rows. The page break positions, on the other hand, should be stored as point values as opposed to range values, since they rarely occur in ranges and are always set on individual rows.

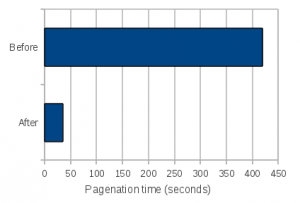

I picked STL’s std::set container to store the automatic and manual page break positions (they are stored separately in two set containers). That alone significantly sped up sheet pagination, which had previously suffered from the slow storage performance of the old container. Later on I improved the pagination performance even further by modifying the pagination algorithm itself, but more on that later.
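As a rough illustration of point-based storage, the sketch below keeps automatic and manual breaks in two separate std::set instances. The `PageBreaks` class and its member names are hypothetical and only show the idea; Calc’s real code is organized differently.

```cpp
#include <initializer_list>
#include <set>

using RowIndex = int;

// Hypothetical wrapper around two sets of break positions.
class PageBreaks {
    std::set<RowIndex> automatic_;
    std::set<RowIndex> manual_;

public:
    void setAutomatic(RowIndex row) { automatic_.insert(row); }
    void setManual(RowIndex row) { manual_.insert(row); }

    // Automatic breaks are recomputed on pagination; manual ones persist.
    void clearAutomatic() { automatic_.clear(); }

    bool hasBreak(RowIndex row) const {
        return automatic_.count(row) > 0 || manual_.count(row) > 0;
    }

    // First break at or after `row`, or -1 if there is none.
    // Each set lookup is logarithmic in the number of breaks.
    RowIndex nextBreak(RowIndex row) const {
        RowIndex best = -1;
        for (const std::set<RowIndex>* s : {&automatic_, &manual_}) {
            auto it = s->lower_bound(row);
            if (it != s->end() && (best < 0 || *it < best)) best = *it;
        }
        return best;
    }
};
```

Because each break is a single key, the number of stored elements equals the number of breaks, independent of how the other row attributes are distributed.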

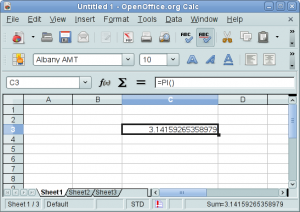

For the hidden and filtered attributes, I picked a data structure that I call the flat segment tree, which I designed and implemented specifically for this purpose. Row heights are also stored in the flat segment tree.

Flat segment tree

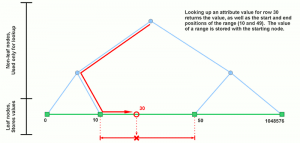

I named this data structure “flat segment tree” because it is a modified version of a data structure known as the segment tree. Unlike the original segment tree, which supports storage of overlapping ranges, my version only stores non-overlapping ranges, hence the name “flat”. The structure of the flat segment tree largely resembles that of the original segment tree; it consists of a balanced binary tree whose leaf nodes store the values and whose non-leaf nodes store auxiliary data used only for querying. The leaf nodes are doubly linked, giving each of them quick access to its neighbors. Since ranges never overlap in this data structure, one leaf node represents both the end of the preceding range and the start of the range that follows. Last but not least, this data structure is a template, and allows you to specify the types of both key and value.

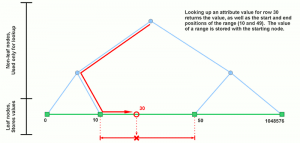

There are three advantages of using this data structure: 1) compactness of storage, since only the range boundaries are stored as nodes, 2) reasonably fast lookup thanks to its tree structure, and 3) a single query of a stored value also returns the lower and upper boundary positions of that range with no additional overhead. The last point is very important, as I will explain in the next section.

As an additional rule, the flat segment tree guarantees that the values of adjacent ranges are always different. There is no exception to this rule, so you can take advantage of this when you use this structure in your code.
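The behavior described above can be imitated in a few lines. The sketch below is not the flat segment tree itself and does not use its API: it is a stand-in built on std::map, with hypothetical names (`FlatRangeStore`, `set`, `query`, `Hit`), that only reproduces the three observable properties: non-overlapping ranges, adjacent ranges that always hold different values, and a query that returns the range boundaries along with the value.

```cpp
#include <cassert>
#include <cstddef>
#include <iterator>
#include <map>

// Stand-in for a flat range container over [min, max).
// Each map key is the start of a segment; its value applies up to the next key.
template <typename Key, typename Value>
class FlatRangeStore {
public:
    struct Hit {
        Value value;
        Key start;  // inclusive
        Key end;    // exclusive
    };

    FlatRangeStore(Key min, Key max, Value init) : min_(min), max_(max) {
        bounds_.emplace(min, init);
    }

    // Assign v to every position in [start, end).
    void set(Key start, Key end, const Value& v) {
        if (start < min_) start = min_;
        if (max_ < end) end = max_;
        if (!(start < end)) return;

        // Remember the value that was in effect at `end` before the change.
        const bool hasTail = end < max_;
        Value tail = v;
        if (hasTail) tail = std::prev(bounds_.upper_bound(end))->second;

        // Drop every boundary inside [start, end].
        bounds_.erase(bounds_.lower_bound(start), bounds_.upper_bound(end));

        // Re-insert boundaries only where the value actually changes, which
        // keeps the values of adjacent segments different.
        auto next = bounds_.lower_bound(start);
        const bool mergeLeft =
            next != bounds_.begin() && std::prev(next)->second == v;
        if (!mergeLeft) bounds_.emplace(start, v);
        if (hasTail && !(tail == v)) bounds_.emplace(end, tail);
    }

    // Value at pos plus the boundaries of the segment containing it.
    Hit query(Key pos) const {
        assert(!(pos < min_) && pos < max_);
        auto next = bounds_.upper_bound(pos);
        auto it = std::prev(next);
        return Hit{it->second, it->first,
                   next == bounds_.end() ? max_ : next->first};
    }

    std::size_t segmentCount() const { return bounds_.size(); }

private:
    Key min_;
    Key max_;
    std::map<Key, Value> bounds_;
};
```

For example, marking rows 10 to 19 and then rows 20 to 29 as hidden leaves three segments rather than four, and querying row 15 reports the merged range of 10 to 30 (end exclusive).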

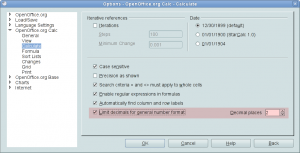

Also, please keep in mind that, while the lookup of a value is reasonably fast, it is not without overhead. You are therefore discouraged from performing, say, a lookup for every single row when iterating through a series of rows in a loop. Instead, make judicious use of the range boundary information to skip ahead in such situations.

This data structure is distributed independently of the OOo code base, licensed under MIT/X11. You can find the source code at http://code.google.com/p/multidimalgorithm/. That project includes data structures other than the flat segment tree; however, only the flat segment tree is currently usable by third-party programs. The implementations of the other structures are still at an experimental stage, and need to be properly templatized before they become usable in general. Even the flat segment tree is largely undocumented. This is intentional, since the API is still not entirely frozen and is subject to change in future versions. You have been warned.

Loop count reduction

Aside from the aforementioned improvement associated with the row attribute storage, I also worked on improving various algorithms used throughout Calc’s core, by taking advantage of one feature of the new data structure.

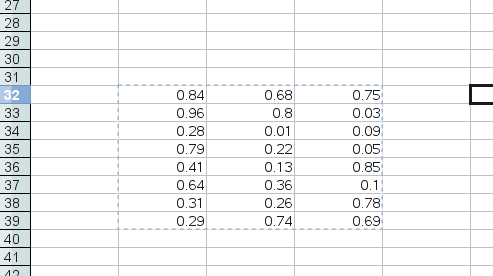

As I mentioned earlier, the flat segment tree returns the lower and upper boundary positions of the range as part of a normal value query. You can make use of this extra piece of information to significantly reduce the number of iterations in an algorithm that loops through a wide row range. Put another way, since you already know the attribute value associated with that range, and you also know the start and end positions of that range, you don’t need to query the value for every single row position within that range. Fewer iterations mean less time to complete the operation, and hence better performance.
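Here is a minimal sketch of that skip-ahead pattern, counting visible rows in a row range. It assumes a simple std::map stand-in for the range container (key = first row of a segment, value = hidden flag, with an entry for row 0 always present); `HiddenSegments`, `segmentAt` and `countVisibleRows` are hypothetical names, and the `lookups` counter is there only to show how few queries are needed.

```cpp
#include <algorithm>
#include <iterator>
#include <map>

// Stand-in range container: key = first row of a segment, value = hidden flag.
// Assumed to always contain an entry for row 0.
using HiddenSegments = std::map<int, bool>;

struct Segment {
    bool hidden;
    int first;  // inclusive
    int last;   // inclusive
};

// One query returns the value and the boundaries of the enclosing segment.
Segment segmentAt(const HiddenSegments& segs, int row, int maxRow) {
    auto next = segs.upper_bound(row);
    auto it = std::prev(next);
    const int last = next == segs.end() ? maxRow : next->first - 1;
    return {it->second, it->first, last};
}

// Counts visible rows in [firstRow, lastRow] with one lookup per segment
// instead of one lookup per row.
long countVisibleRows(const HiddenSegments& segs, int firstRow, int lastRow,
                      int maxRow, int* lookups = nullptr) {
    long visible = 0;
    int queries = 0;
    for (int row = firstRow; row <= lastRow;) {
        const Segment s = segmentAt(segs, row, maxRow);
        ++queries;
        const int stop = std::min(s.last, lastRow);
        if (!s.hidden) visible += stop - row + 1;
        row = stop + 1;  // jump straight to the start of the next segment
    }
    if (lookups) *lookups = queries;
    return visible;
}
```

On a sheet of 1048576 rows with a single hidden block, the whole sheet is covered with three lookups rather than roughly a million.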

That’s the gist of the performance improvement work I did in various parts of Calc, though there were slight variations depending on which part of Calc’s code I worked on. In summary, the following areas received significant performance improvement:

- Sheet pagination, which consists of loops that make numerous calls to query the hidden states and heights of rows.

- Print preview, mostly due to the improvement of the pagination performance.

- Calculation of a drawing object’s vertical position.

I’m sure there are other areas where the performance still needs improvement. As this is an on-going effort, we will work on resolving any other outstanding issues as we discover them.

Other related work

In addition to the re-work of the row attribute storage and the performance improvement involving the row attribute queries, I’ve also made other changes to improve performance and ensure that Calc’s basic usability is not sacrificed.

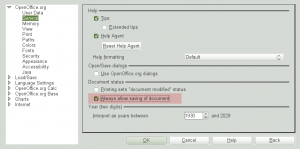

Removal of redundant pagination

Prior to my row limit increase work, Calc would re-calculate page break positions again and again, even when nothing had been done to the document that would alter them, such as changing row heights, filtering rows, or inserting manual page breaks. Because the pagination operation became much more expensive after the row limit increase, I decided to remove this redundancy so that re-pagination is done only when necessary. This change made an especially large impact on print preview performance, since (for whatever reason) Calc was performing full pagination every time you moved the mouse cursor within the preview pane, even when the movement was only by one pixel! Removal of such redundant re-pagination has brought sanity back to the print preview experience.
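One common way to express "re-paginate only when necessary" is a dirty flag. The sketch below shows that general pattern under the assumption that every operation able to move a page break calls `invalidate()`; it is not a description of how Calc actually tracks this, and `PaginationCache` is a hypothetical name.

```cpp
// Minimal dirty-flag pattern around an expensive pagination step.
class PaginationCache {
    bool dirty_ = true;  // nothing has been paginated yet
    int runs_ = 0;       // number of full paginations actually performed

public:
    // Call from anything that can move page breaks: row height changes,
    // filtering, manual page break insertion, and so on.
    void invalidate() { dirty_ = true; }

    // Call wherever up-to-date page breaks are needed, e.g. on repaint.
    void ensurePaginated() {
        if (!dirty_) return;  // nothing changed, reuse previous result
        paginate();
        dirty_ = false;
    }

    int runs() const { return runs_; }

private:
    // Placeholder for the expensive full pagination.
    void paginate() { ++runs_; }
};
```

Repeated calls to `ensurePaginated()`, such as those triggered by mouse movement in a preview pane, then cost a single branch until the document actually changes.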

Efficient zoom level calculation

The row limit increase also degraded the performance of calculating the zoom level needed to fit the document within a specified page size. Calc does this when you set your document to “fit within x pages wide and y pages tall” or “fit to n pages in total”. The root cause was again the degraded pagination performance. This time, however, I could not use the trick of “performing pagination only once”, because we do need to perform full pagination repeatedly at different zoom levels in order to find the correct one.

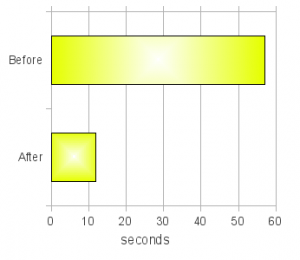

The solution I employed was to reduce the number of re-paginations by using the bisection method to arrive at the correct zoom level. The old code worked like this:

- Initialize the zoom level to 100%, and perform full pagination.

- If that doesn’t fit the required page size, decrement the zoom level by 1%, and perform full pagination once again.

- If that doesn’t fit, decrement the zoom level by 1%, and try again.

- Continue this until the correct zoom level is reached.

Of course, if the correct zoom level is far below the initial value of 100%, this algorithm is not very efficient. If the desired zoom level is 35%, for example, Calc would need to perform full pagination 66 times. Switching to the bisection method here reduced the full pagination count roughly down to the neighborhood of 5 or 6. At the time I worked on this, each full pagination took about 1 second. So the reduction of pagination count from 66 to 5 roughly translated to the reduction of the zoom level calculation from 1 minute to 5 seconds. Suffice it to say that this made a big difference.
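The two strategies can be compared side by side. In the sketch below, `fits` stands for "perform full pagination at this zoom level and check the page size"; it is assumed to be monotonic (if a zoom level fits, every smaller one fits too). The function names and the minimum zoom of 1% are assumptions made for illustration, not values taken from Calc.

```cpp
#include <functional>

// Returns true if the document fits the required pages at the given zoom (%).
// Each call stands for one full pagination.
using FitsFn = std::function<bool(int)>;

// Old approach: start at startZoom and step down 1% at a time.
int findZoomLinear(const FitsFn& fits, int startZoom, int minZoom) {
    for (int zoom = startZoom; zoom > minZoom; --zoom)
        if (fits(zoom)) return zoom;
    return minZoom;  // fall back to the smallest allowed zoom
}

// Bisection: repeatedly halve the interval that must contain the largest
// zoom level that still fits. Falls back to minZoom if nothing larger fits.
int findZoomBisect(const FitsFn& fits, int minZoom, int maxZoom) {
    int best = minZoom;
    int lo = minZoom + 1;
    int hi = maxZoom;
    while (lo <= hi) {
        const int mid = lo + (hi - lo) / 2;
        if (fits(mid)) {
            best = mid;    // mid fits; try something larger
            lo = mid + 1;
        } else {
            hi = mid - 1;  // mid is too large; try something smaller
        }
    }
    return best;
}
```

With a target of 35%, the linear version calls `fits` 66 times, while the bisection version needs only a handful of calls, since the number of calls grows with the logarithm of the zoom range rather than with the distance from 100%.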

Even better news is that the performance of this operation is much faster today, thanks to the improvement I made in the pagination performance in general.

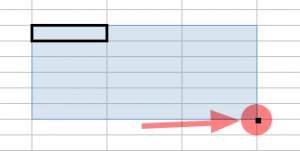

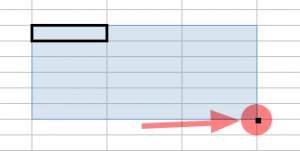

Calculation of autofill marker position

When you make a selection, Calc puts a little square at the lower-right corner of the selection. That’s called an autofill marker, and it’s there to let you drag the selection to fill values.

Interestingly, calculating its position (especially its vertical position) turned out to be a very slow operation when the marker was positioned close to the bottom of the sheet. Worse, Calc calculated its position even when it was outside the visible area. The resulting slowdown was especially apparent when selecting columns: because selecting a column always places the autofill marker at the last row of the sheet, increasing the row limit made that process sluggish. The solution was simply to detect whether the autofill marker is outside the visible area, and if it is, skip calculating its position (since there is no point calculating its position if we don’t need to display it). That brought column selection back to normal.
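The check itself is tiny. The sketch below assumes the visible area is known as a first and last row and that the expensive position calculation is available as a callback; `VisibleRows` and `markerPosition` are hypothetical names used only to illustrate the early exit.

```cpp
#include <functional>

struct VisibleRows {
    int first;  // first visible row, inclusive
    int last;   // last visible row, inclusive
};

// Computes the marker's vertical position only when the marker row is
// visible. Returns false, leaving y untouched, when there is nothing to draw.
bool markerPosition(int markerRow, const VisibleRows& visible,
                    const std::function<long(int)>& computeY, long& y) {
    if (markerRow < visible.first || markerRow > visible.last)
        return false;  // off-screen: skip the expensive calculation
    y = computeY(markerRow);
    return true;
}
```

A column selection places the marker on the last row of the sheet, which is almost never visible, so the expensive callback is not invoked at all in that case.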

However, the sluggishness of making selection can still manifest itself under the right (wrong?) condition even with this change. We still need to speed up the calculation of its vertical position, by improving the calculation algorithm itself.

Show stoppers for the upstream build

When we met during last year’s OOoCon in Orvieto, I sat down with Niklas Nebel and Eike Rathke, Sun’s Calc co-leads, and briefly discussed the possibility of increasing the row limit in the upstream version of Calc. During our discussion, I was told that, in addition to the general performance issues, most of which I’ve already resolved, we will need to resolve at least two more outstanding issues before they can set the row limit to 1 million in the upstream build.

First, we need to improve the performance of the formula calculation and the value change propagation mechanism (that we call “broadcasting”). The existing implementation is still tuned for the grid size of 65536 rows; we need to re-tune that for 1 million rows and to ensure that the performance will not suffer after the row limit increase.

Second, we need to resolve the incorrect positioning of drawing objects at higher row positions. This one is somewhat tricky, since the drawing objects are drawn entirely independently of the sheet grid, and the coarse resolution of the drawing layer causes the vertical position of a drawing object to deviate from its intended position. Generally speaking, the higher the row position, the larger the deviation. With a maximum of 65536 rows this was not a big issue, since the deviation was barely noticeable even at the highest row position. But because the problem becomes much more noticeable with 1 million rows, it needs to be addressed somehow.

Going forward…

Going forward, I will continue to hunt for the remaining performance issues and squash them one by one. The major ones should all be resolved by now, so what remains should be corner cases, performance-wise. As for the two outstanding issues I mentioned in the previous section, we will have to take a good look at them at some point. Whether or not they are really show stoppers is somewhat a matter of personal viewpoint, but they are real issues needing resolution, no matter what their perceived severity is.

Also, as of this writing, the manual row size attribute is still stored in the old, array-based container. It will probably make sense to migrate that to the flat segment tree, so that we can eliminate the old container once and for all, and have a fresh start with the new container. Having said that, doing so would require another round of refactoring of non-trivial scale, so it should be conducted with care and proper testing.

The ODS export filter still needs re-work. Currently, all row attributes, which are now stored separately, are temporarily merged back into the old array-based container before the document is exported to ODS. The reason is that the ODS export filter code still expects the partitioning behavior of the old container during the export of row styles. In order to fully embrace the new storage of row attributes, that code needs to be adjusted to work with the new storage scheme. Again, this will require a non-trivial amount of code change, and thus should be conducted with care.

Calculation of the vertical position of various objects, such as the autofill marker, can still use some algorithmic improvement. We can make it more efficient by taking advantage of the flat segment tree, in a way similar to how the pagination algorithm was made more efficient.

Conclusion

This concludes my write-up on the current status of Calc’s row limit increase work. I hope I’ve made it clear that work is underway toward making that happen without degrading Calc’s basic usability. As a matter of fact, the row limit has already been increased to 1 million in some variants of OOo, such as Go-OO. I believe we’ll be able to increase the row limit in the upstream version in the not-so-distant future as long as we keep working at the remaining issues.

That’s all I have to say for now. Thank you very much, ladies and gentlemen.