I spent my entire last week on my personal project, by taking advantage of Novell’s HackWeek. Officially, HackWeek took place two weeks ago, but because I had to travel that week I postponed mine till the following week.

Ixion is the project I worked on as part of my HackWeek. This project is an experimental effort to develop a stand-alone library that supports parallel computation of formula expressions using threads. I’d been working on this on and off in my spare time, but when the opportunity came along to spend one week of my paid time on any project of my choice (personal or otherwise), I didn’t hesitate to pick Ixion.

Overview

So, what’s Ixion? Ixion aims to provide a library for calculating the results of formula expressions stored in multiple named targets, or “cells”. The cells can be referenced from each other, and the library takes care of resolving their dependencies automatically upon calculation. The caller can run the calculation routine either in a single-threaded mode, or a multi-threaded mode. The library also supports re-calculation where the contents of one or more cells have been modified since the last calculation, and a partial calculation of only the affected cells gets performed. It is written entirely in C++, and makes extensive use of the boost library to achieve portability across different platforms. It has currently been tested to build on Linux and Windows.

The goal is to eventually bring this library up to the level where it can serve as a full-featured calculation engine for spreadsheet applications. But right now, this project remains as an experimental, proof-of-concept project to help me understand what is required to build a threaded calculation engine capable of performing all sorts of tasks required in a typical spreadsheet app.

I consider this project a library project; however, building this project only creates a single stand-alone console application at the moment. I plan to separate it into a shared library and a front-end executable in the future, to allow external apps to dynamically link to it.

How it works

Building this project creates an executable called ixion-parser. Running it with a -h option displays the following help content:

Usage: ixion-parser [options] FILE1 FILE2 ...

The FILE must contain the definitions of cells according to the cell definition rule.

Allowed options:

-h [ --help ] print this help.

-t [ --thread ] arg specify the number of threads to use for calculation.

Note that the number specified by this option

corresponds with the number of calculation threads i.e.

those child threads that perform cell interpretations.

The main thread does not perform any calculations;

instead, it creates a new child thread to manage the

calculation threads, the number of which is specified

by the arg. Therefore, the total number of threads

used by this program will be arg + 2. |

Usage: ixion-parser [options] FILE1 FILE2 ...

The FILE must contain the definitions of cells according to the cell definition rule.

Allowed options:

-h [ --help ] print this help.

-t [ --thread ] arg specify the number of threads to use for calculation.

Note that the number specified by this option

corresponds with the number of calculation threads i.e.

those child threads that perform cell interpretations.

The main thread does not perform any calculations;

instead, it creates a new child thread to manage the

calculation threads, the number of which is specified

by the arg. Therefore, the total number of threads

used by this program will be arg + 2.

The parser expects one or more cell definition files as arguments. A cell definition file may look like this:

%mode init

A1=1

A2=A1+10

A3=A2+A1*30

A4=(10+20)*A2

A5=A1-A2+A3*A4

A6=A1+A3

A7=A7

A8=10/0

A9=A8

%calc

%mode result

A1=1

A2=11

A3=41

A4=330

A5=13520

A6=42

A7=#REF!

A8=#DIV/0!

A9=#DIV/0!

%check

%mode edit

A6=A1+A2

%recalc

%mode result

A1=1

A2=11

A3=41

A4=330

A5=13520

A6=12

A7=#REF!

A8=#DIV/0!

A9=#DIV/0!

%check

%mode edit

A1=10

%recalc |

%mode init

A1=1

A2=A1+10

A3=A2+A1*30

A4=(10+20)*A2

A5=A1-A2+A3*A4

A6=A1+A3

A7=A7

A8=10/0

A9=A8

%calc

%mode result

A1=1

A2=11

A3=41

A4=330

A5=13520

A6=42

A7=#REF!

A8=#DIV/0!

A9=#DIV/0!

%check

%mode edit

A6=A1+A2

%recalc

%mode result

A1=1

A2=11

A3=41

A4=330

A5=13520

A6=12

A7=#REF!

A8=#DIV/0!

A9=#DIV/0!

%check

%mode edit

A1=10

%recalc

I hope the format of the cell definition rule is straightforward. The definitions are read from top down. I used the so-called A1 notation to name target cells, but it doesn’t have to be that way. You can use any naming scheme to name target cells as long as the lexer recognizes them as names. Also, the format supports a command construct; a line beginning with a ‘%’ is considered a command. Several commands are currently available. For instance the mode command lets you switch input modes. The parser currently supports three input modes:

-

init – initialize cells with specified contents.

-

result – pick up expected results for cells, for verification.

-

edit – modify cell contents.

In addition to the mode command, the following commands are also supported:

-

calc – perform full calculation, by resetting the cached results of all involved cells.

-

recalc – perform partial re-calculation of modified cells and cells that reference modified cells, either directly or indirectly.

-

check – verify the calculation results.

Given all this, let’s see what happens when you run the parser with the above cell definition file.

./ixion-parser -t 4 test/01-simple-arithmetic.txt

Using 4 threads

Number of CPUS: 4

---------------------------------------------------------

parsing test/01-simple-arithmetic.txt

---------------------------------------------------------

A1: 1

A1: result = 1

---------------------------------------------------------

A2: A1+10

A2: result = 11

---------------------------------------------------------

A3: A2+A1*30

A3: result = 41

---------------------------------------------------------

A4: (10+20)*A2

A4: result = 330

---------------------------------------------------------

A5: A1-A2+A3*A4

A5: result = 13520

---------------------------------------------------------

A8: 10/0

result = #DIV/0!

---------------------------------------------------------

A6: A1+A3

A6: result = 42

---------------------------------------------------------

A9:

result = #DIV/0!

---------------------------------------------------------

A7: result = #REF!

---------------------------------------------------------

checking results

---------------------------------------------------------

A2 : 11

A8 : #DIV/0!

A3 : 41

A9 : #DIV/0!

A4 : 330

A5 : 13520

A6 : 42

A7 : #REF!

A1 : 1

---------------------------------------------------------

recalculating

---------------------------------------------------------

A6: A1+A2

A6: result = 12

---------------------------------------------------------

checking results

---------------------------------------------------------

A2 : 11

A8 : #DIV/0!

A3 : 41

A9 : #DIV/0!

A4 : 330

A5 : 13520

A6 : 12

A7 : #REF!

A1 : 1

---------------------------------------------------------

recalculating

---------------------------------------------------------

A1: 10

A1: result = 10

---------------------------------------------------------

A2: A1+10

A2: result = 20

---------------------------------------------------------

A3: A2+A1*30

A3: result = 320

---------------------------------------------------------

A4: (10+20)*A2

A4: result = 600

---------------------------------------------------------

A5: A1-A2+A3*A4

A5: result = 191990

---------------------------------------------------------

A6: A1+A2

A6: result = 30

---------------------------------------------------------

(duration: 0.00113601 sec)

--------------------------------------------------------- |

./ixion-parser -t 4 test/01-simple-arithmetic.txt

Using 4 threads

Number of CPUS: 4

---------------------------------------------------------

parsing test/01-simple-arithmetic.txt

---------------------------------------------------------

A1: 1

A1: result = 1

---------------------------------------------------------

A2: A1+10

A2: result = 11

---------------------------------------------------------

A3: A2+A1*30

A3: result = 41

---------------------------------------------------------

A4: (10+20)*A2

A4: result = 330

---------------------------------------------------------

A5: A1-A2+A3*A4

A5: result = 13520

---------------------------------------------------------

A8: 10/0

result = #DIV/0!

---------------------------------------------------------

A6: A1+A3

A6: result = 42

---------------------------------------------------------

A9:

result = #DIV/0!

---------------------------------------------------------

A7: result = #REF!

---------------------------------------------------------

checking results

---------------------------------------------------------

A2 : 11

A8 : #DIV/0!

A3 : 41

A9 : #DIV/0!

A4 : 330

A5 : 13520

A6 : 42

A7 : #REF!

A1 : 1

---------------------------------------------------------

recalculating

---------------------------------------------------------

A6: A1+A2

A6: result = 12

---------------------------------------------------------

checking results

---------------------------------------------------------

A2 : 11

A8 : #DIV/0!

A3 : 41

A9 : #DIV/0!

A4 : 330

A5 : 13520

A6 : 12

A7 : #REF!

A1 : 1

---------------------------------------------------------

recalculating

---------------------------------------------------------

A1: 10

A1: result = 10

---------------------------------------------------------

A2: A1+10

A2: result = 20

---------------------------------------------------------

A3: A2+A1*30

A3: result = 320

---------------------------------------------------------

A4: (10+20)*A2

A4: result = 600

---------------------------------------------------------

A5: A1-A2+A3*A4

A5: result = 191990

---------------------------------------------------------

A6: A1+A2

A6: result = 30

---------------------------------------------------------

(duration: 0.00113601 sec)

---------------------------------------------------------

Notice that at the beginning of the output, it displays the number of threads being used, and the number of “CPU”s it detected. Here, the “CPU” may refer to the number of physical CPUs, the number of cores, or the number of hyper-threading units. I’m well aware that I need to use a different term for this other than “CPU”, but anyways… The number of child threads to use to perform calculation can be specified at run-time via -t option. When running without the -t option, the parser will run in a single-threaded mode. Now, let me go over what the above output means.

The first calculation performed is a full calculation. Since no cells have been calculated yet, we need to calculate results for all defined cells. This is followed by a verification of the initial calculation. After this, we modify cell A6, and perform partial re-calculation. Since no other cells depend on the result of cell A6, the re-calc only calculates A6.

Now, the third calculation is also a partial re-calculation following the modification of cell A1. This time, because several other cells do depend on the result of A1, those cells also need to be re-calculated. The end result is that cells A1, A2, A3, A4, A5 and A6 all get re-calculated.

Under the hood

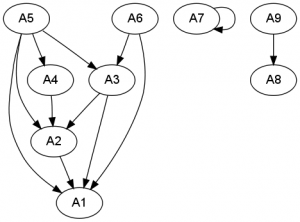

Cell dependency resolution

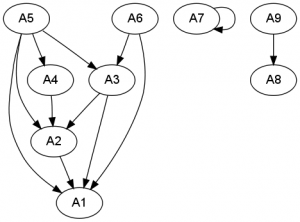

There are several technical aspects of the implementation of this library I’d like to cover. The first is cell dependency resolution. I use a well-known algorithm called topological sort to sort cells in order of dependency so that cells can be calculated one by one without being blocked by the calculation of precedent cells. Topological sort is typically used to manage scheduling of tasks that are inter-dependent with each other, and it was a perfect one to use to resolve cell dependencies. This algorithm is a by-product of depth first search of directed acyclic graph (DAG), and is well-documented. A quick google search should give you tons of pseudo code examples of this algorithm. This algorithm work well both for full calculation and partial re-calculation routines.

Managing threaded calculation

The heart of this project is to implement parallel evaluation of formula expressions, which has been my number 1 goal from the get-go. This is also the reason why I put my focus on designing the threaded calculation engine as my initial goal before I start putting my focus into other areas. Programming with threads was also very new to me, so I took extra care to ensure that I understand what I’m doing, and I’m designing it correctly. Also, designing a framework that uses multiple threads can easily get out-of-hand and out-of-control when it’s overdone. So, I made an extra effort to limit the area where multiple threads are used while keeping the rest of the code single-threaded, in order to keep the code simple and maintainable.

As I soon realized, even knowing the basics of programming with threads, you are not immune to falling into many pitfalls that may arise during the actual designing and debugging of concurrent code. You have to go extra miles ensuring that access to thread-global data are synchronized, and that one thread waits for another thread in case threads must be executed in certain order. These things may sound like common sense and probably are in every thread programming text book, but in reality they are very easy to overlook, especially to those who have not had substantial exposure to concurrency before. Parallelism seemed that un-orthodox to conventional minds like myself. Having said all that, once you go through enough pain dealing with concurrency, it does become less painful after a while. Your mind can simply adjust to “thinking in parallel”.

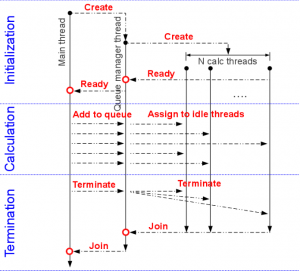

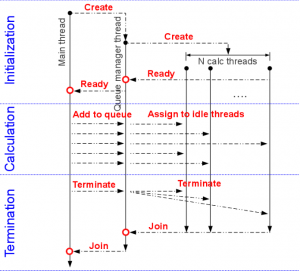

Back to the topic. I’ve picked the following pattern to manage threaded calculation.

First, the main thread creates a new thread whose job is to manage cell queues, that is, receiving queues from the main thread and assigning them to idle threads to perform calculation. It is also responsible for keeping track of which threads are idle and ready to take on a cell assignment. Let’s call this thread a queue manager thread. When the queue manager thread is created, it spawns a specified number of child threads, and waits until they are all ready. These child threads are the ones that perform cell calculation, and we call them calc threads.

Each calc thread registers itself as an idle thread upon creation, then sleeps until the queue manager thread assigns it a cell to calculate and signals it to wake up. Once awake, it calculates the cell, registers itself as an idle thread once again and goes back to sleep. This cycle continues until the queue manager thread sends a termination request to it, after which it breaks out of the cycle and reaches the end of its execution path to terminate.

The role of the queue manager thread is to receive cell calculation requests from the main thread and pass them on to idle calc threads. It keeps doing it until it receives a termination request from the main thread. Once receiving the termination request from the main thread, it sends all the remaining cells in queue to the calc threads to finish up, then sends termination requests to the calc threads and wait until all of them terminate.

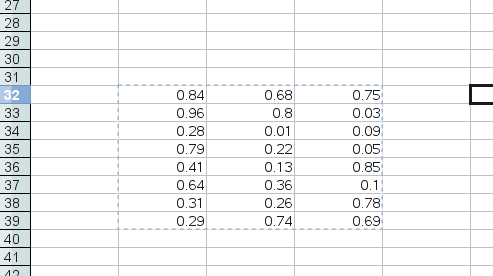

Thanks to the cells being sorted in topological order, the process of putting a cell in queue and having a calc thread perform calculation is entirely asynchronous. The only exception is that when referencing another cell during calculation, the result of that referenced cell may not be available at the time of the value query due to concurrency. In such cases, the calculating thread needs to block its execution until the result of the referenced cell becomes available. When running in a single-threaded mode, on the other hand, the result of a referenced cell is guaranteed to be available as long as cells are calculated in topological order and contain no circular references.

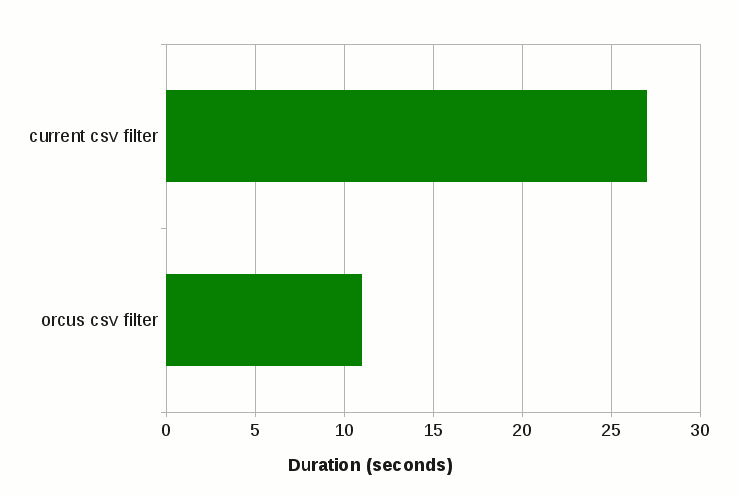

What I accomplished during HackWeek

During HackWeek, I was able to accomplish quite a few things. Before the HackWeek, the threaded calculation framework was not even there; the parser was only able to reliably perform calculation in a single-threaded mode. I had some test code to design the threaded queue management framework, but that code had yet to be integrated into the main formula interpreter code. A lot of work was still needed, but thanks to having an entire week devoted for this, I was able to

- port the test threaded queue manager framework code into the formula interpreter code,

- adopt the circular dependency detection code for the new threaded calculation framework,

- test the new framework to squeeze lots of kinks out,

- put some performance optimization in the cell definition parser and the formula lexer code,

- implement result verification framework, and

- implement partial re-calculation.

Had I had to do all this in my spare time alone, it would have easily taken months. So, I’m very thankful for the event, and I look forward to having another opportunity like this in hopefully not-so-distant future.

What lies ahead

So, what lies ahead for Ixion? You may ask. There are quite a few things to get done. Let me start first by saying that, this library is far from providing all the features that a typical spreadsheet application needs. So, there are still lots of work needed to make it even usable. Moreover, I’m not even sure whether this library will become usable enough for real-world spreadsheet use, or it will simply end up being just another interesting proof-of-concept. My hope is of course to see this library evolve into maturity, but at the same time I’m also aware that it would be hard to advance this project with only my scarce spare time to spend in.

With that said, here are some outstanding issues that I plan on addressing as time permits.

- Add support for literal strings, and support textural formula results in addition to numerical results.

- Add support for empty cells. Empty cells are those cells that are not defined in the model definition file but can still be referenced. Currently, referencing a cell that is not defined causes a reference error.

- Add support for cell ranges. This implies that I need to make cell instances addressable by 3-dimensional coordinates rather than by pointer values.

- Split the code into two parts: a shared library and an executable.

- Use autoconf to make the build process configurable.

- Make the expression parser feature-complete.

- Implement more functions. Currently only MAX and MIN are implemented.

- Support for localized numbers.

- Lots and lots more.

Conclusion

This concludes my HackWeek report. Thank you very much, ladies and gentlemen.